The conventional wisdom about how to spot a liar is all wrong.

Police thought that 17-year-old Marty Tankleff seemed too calm after finding his mother stabbed to death and his father mortally bludgeoned in the family’s Long Island home. Authorities didn’t believe his claims of innocence, and he spent 17 years in prison for the murders.

Yet in another case, detectives thought that 16-year-old Jeffrey Deskovic seemed too distraught and too eager to help detectives after his high-school classmate was found strangled. He, too, was judged to be lying and served nearly 16 years for the crime.

One person was not upset enough. The other was too upset. How can such opposite feelings both be telltale clues of hidden guilt?

They’re not, says psychologist Maria Hartwig, a deception researcher at John Jay College of Criminal Justice at the City University of New York. Tankleff and Deskovic, both later exonerated, were victims of a pervasive misconception: that you can spot liars by the way they act. Across cultures, people believe that behaviors such as averted gaze, fidgeting, and stuttering can betray deceivers.

In fact, researchers have found little evidence to support this belief—despite decades of searching. “One of the problems we face as scholars of lying is that everybody thinks they know how lying works,” says Hartwig, who co-authored a study of nonverbal cues to lying in the Annual Review of Psychology. Such overconfidence has led to serious miscarriages of justice, as Tankleff and Deskovic know all too well. “The mistakes of lie detection are costly to society and people victimized by misjudgments,” Hartwig says. “The stakes are really high.”

Psychologists have long known how hard it is to spot a liar. In 2003, psychologist Bella DePaulo, now affiliated with the University of California at Santa Barbara, and her colleagues combed through the scientific literature, gathering 116 experiments that compared people’s behavior when lying and when telling the truth. The studies assessed more than 100 possible nonverbal cues, including averted gaze, blinking, talking louder (a nonverbal cue because it doesn’t depend on the words used), shrugging, shifting posture, and movements of the head, hands, arms, or legs. None proved to be a reliable indicator of lying, though a few were weakly correlated with it, such as dilated pupils and a tiny increase, undetectable to the human ear, in the pitch of the voice.

Three years later, DePaulo and psychologist Charles Bond of Texas Christian University reviewed 206 studies involving a total of 24,483 observers judging the veracity of 6,651 communications by 4,435 individuals. Neither law-enforcement experts nor student volunteers were able to pick true from false statements better than 54 percent of the time—just slightly above chance. In individual experiments, accuracy ranged from 31 to 73 percent, with the smaller studies varying more widely. “The impact of luck is apparent in small studies,” says Bond. “In studies of sufficient size, luck evens out.”

This size effect suggests that the greater accuracy reported in some of the experiments may just boil down to chance, says psychologist and applied-data analyst Timothy Luke at the University of Gothenburg in Sweden. “If we haven’t found large effects by now,” he says, “it’s probably because they don’t exist.”
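Bond’s point about luck can be made concrete with a little arithmetic. The sketch below is an illustration, not part of the research described here. It assumes that every judgment in a study is an independent guess that is correct with the 54 percent probability from the review (a simple binomial model), and the study sizes of 20, 200, and 2,000 judgments are hypothetical. It computes how much a study’s observed accuracy would wobble by chance alone.

```python
import math

# Overall accuracy reported in the Bond and DePaulo review (54 percent).
TRUE_ACCURACY = 0.54


def accuracy_spread(n_judgments: int, p: float = TRUE_ACCURACY) -> float:
    """Standard error of observed accuracy in a study with n judgments.

    Simplifying assumption: each judgment is an independent trial that is
    correct with probability p (a binomial model).
    """
    return math.sqrt(p * (1 - p) / n_judgments)


# Hypothetical study sizes, chosen for illustration only.
for n in (20, 200, 2000):
    se = accuracy_spread(n)
    # About 95 percent of studies land within two standard errors
    # (normal approximation; crude for very small studies).
    low, high = TRUE_ACCURACY - 2 * se, TRUE_ACCURACY + 2 * se
    print(f"{n:>5} judgments: roughly {low:.0%} to {high:.0%} in about 95% of studies")
```

Under these assumptions, a study with only 20 judgments could report accuracy anywhere from about 32 to 76 percent purely by chance, while a study with 2,000 judgments would rarely stray more than about two points from 54 percent. Because the spread shrinks with the square root of the study size, a hundredfold larger study is ten times steadier.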

Police experts, however, have frequently made a different argument: that the experiments weren’t realistic enough. After all, they say, volunteers—mostly students—instructed to lie or tell the truth in psychology labs don’t face the same consequences as criminal suspects in the interrogation room or on the witness stand. “The ‘guilty’ people had nothing at stake,” says Joseph Buckley, president of John E. Reid and Associates, which trains thousands of law-enforcement officers each year in behavior-based lie detection. “It wasn’t real, consequential motivation.”

Samantha Mann, a psychologist at the University of Portsmouth in the U.K., thought that such criticism by police had a point when she was drawn to deception research 20 years ago. To delve into the issue, she and colleague Aldert Vrij first went through hours of videotaped police interviews of a convicted killer and picked out three known truths and three known lies. Then Mann asked 65 English police officers to view the six statements and judge which were true and which were false. Since the interviews were in Dutch, the officers judged entirely on the basis of nonverbal cues.

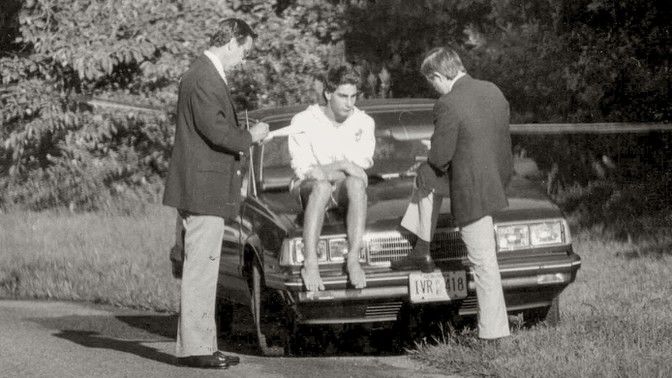

Homicide detectives interview Martin Tankleff outside his home on September 7, 1989

The officers were correct about 64 percent of the time—better than chance, but still not very accurate, she says. The officers who did worst were those who said they relied on nonverbal stereotypes like “liars look away” or “liars fidget.” In fact, the killer maintained eye contact and didn’t fidget while deceiving. “This guy was clearly very nervous, no doubt,” Mann says—but he controlled his behavior to strategically counter the stereotypes.

In a later study, also by Mann and Vrij, 52 Dutch police officers did no better than chance at distinguishing true from false statements made at televised press conferences by family members who had murdered their relatives but publicly denied it in anguished displays. Notably, the officers who performed the worst were those who felt that the emotional displays were genuine. But what did that signify? “If a husband killed his wife, he could be upset for all kinds of reasons, like remorse or getting caught,” Mann says. “Nonverbal behavior is so idiosyncratic. If you focus on the nonverbal behavior like emotions, you will trip up.”

Confirming these results on a large scale years later, Hartwig and Bond reviewed the literature for studies comparing people’s abilities to detect high- and low-stakes lies. They found no evidence that people were any better at detecting lies told by criminals or wrongly accused suspects in police investigations than those told by laboratory volunteers.

From serial killers to students fibbing in laboratory experiments, the people in all these experiments spoke their lies out loud. In a study published in 2019, Mann examined nonverbal deceit, such as when someone is trying to conceal illicit activity—a type of deception relevant to detecting bombers or smugglers. She recruited 52 university-student volunteers and asked half of them to transport a laptop said to contain sensitive photographs on a ferry ride between two cities. The volunteers were told to try to blend into the crowd and not look “suspicious,” because people would be trying to identify them. The other half of the group were given a regular cell phone to transport, with no instructions to conceal what they were doing.

When Mann showed videos of the ferry rides to 104 other volunteers and asked them to pick out the “smugglers,” the spotters did no better than chance. In interviews afterward, the “smugglers” said they were nervous, but they consciously tried to act normal and control their nerves with tactics such as listening to music or using their phones.

Next, Mann raised the stakes. Half of a new group of 60 volunteers were given an envelope of Russian, Egyptian, and Korean currency to conceal, while the other half didn’t “smuggle” anything. But this time, Mann sent two researchers onto the ferry to pace around and scrutinize the passengers, appearing to compare their faces to photos on a cell phone.

In this round, 120 observers trying to pick out the “smugglers” on video guessed correctly just 39.2 percent of the time, well below chance. The reason, Mann says, is that the “smugglers” consciously made an effort to look normal, while the “innocent” control volunteers simply acted naturally. Their surprise at the unexpected scrutiny looked to the observers like a sign of guilt.

The finding that deceivers can successfully hide nervousness fills in a missing piece in deception research, says psychologist Ronald Fisher of Florida International University, who trains FBI agents. “Not too many studies compare people’s internal emotions with what others notice,” he says. “The whole point is: Liars do feel more nervous, but that’s an internal feeling as opposed to how they behave as observed by others.”

Studies like these have led researchers to largely abandon the hunt for nonverbal cues to deception. But are there other ways to spot a liar? Today, psychologists investigating deception are more likely to focus on verbal cues—and particularly on ways to magnify the differences between what liars and truth-tellers say.

For example, interviewers can strategically hold back evidence until later in an interview, letting a suspect speak freely first; this can lead liars into contradicting facts the interviewer already knows. In one experiment, Hartwig taught this technique to 41 police trainees, who then correctly identified liars about 85 percent of the time, as compared with 56 percent for another 41 recruits who hadn’t yet received the training. “We are talking significant improvements in accuracy rates,” Hartwig says.

Another interviewing technique taps spatial memory by asking suspects and witnesses to sketch a scene related to a crime or alibi. Because this enhances recall, truth-tellers may report more detail. In a simulated spy mission study published by Mann and her colleagues last year, 122 participants met an “agent” in the school cafeteria, exchanged a code, then received a package. During a sketching interview afterward, participants who were instructed to tell the truth about what happened gave 76 percent more detail about experiences at the location than those asked to cover up the code-package exchange. “When you sketch, you are reliving an event—so it aids memory,” says study co-author Haneen Deeb, a psychologist at the University of Portsmouth.

The experiment was designed with input from U.K. police, who regularly use sketching interviews and work with psychology researchers as part of the nation’s switch to non-guilt-assumptive questioning, which officially replaced accusation-style interrogations in the 1980s and 1990s after scandals involving wrongful conviction and abuse.

In the United States, though, such science-based reforms have yet to make significant inroads among police and other security officials. The U.S. Department of Homeland Security’s Transportation Security Administration, for example, still uses nonverbal deception clues to screen airport passengers for questioning. The TSA’s secretive behavioral screening checklist instructs agents to look for supposed liars’ tells such as an averted gaze—considered a sign of respect in some cultures—and prolonged stare, rapid blinking, complaining, whistling, exaggerated yawning, covering the mouth while speaking, and excessive fidgeting or personal grooming. All have been thoroughly debunked by researchers.

With agents relying on such vague, contradictory grounds for suspicion, it’s perhaps not surprising that passengers lodged 2,251 formal complaints between 2015 and 2018 claiming that they’d been profiled based on nationality, race, ethnicity, or other reasons. Congressional scrutiny of TSA airport screening methods goes back to 2013, when the U.S. Government Accountability Office—an arm of Congress that audits, evaluates, and advises on government programs—reviewed the scientific evidence for behavioral detection and found it lacking, recommending that the TSA limit funding and curtail its use. In response, the TSA eliminated the use of stand-alone behavior-detection officers and reduced the checklist from 94 to 36 indicators, but it retained many scientifically unsupported elements like heavy sweating.

In response to renewed congressional scrutiny, the TSA in 2019 promised to improve staff supervision to reduce profiling. Still, the agency continues to see the value of behavioral screening. As a Homeland Security official told congressional investigators, “common sense” behavioral indicators are worth including in a “rational and defensible security program” even if they don’t meet academic standards of scientific evidence. In a statement to Knowable, TSA media-relations manager R. Carter Langston said that the “TSA believes behavioral detection provides a critical and effective layer of security within the nation’s transportation system.” The TSA points to two separate behavioral-detection successes in the last 11 years that prevented three passengers from boarding airplanes with explosive or incendiary devices.

But, Mann says, without knowing how many would-be terrorists slipped through security undetected, the success of such a program cannot be measured. And, in fact, in 2015 the acting head of the TSA was reassigned after Homeland Security undercover agents in an internal investigation successfully smuggled fake explosive devices and real weapons through airport security 95 percent of the time.

In 2019, Mann, Hartwig, and dozens of other university researchers published a review evaluating the evidence for behavioral-analysis screening, concluding that law-enforcement professionals should abandon this “fundamentally misguided” pseudoscience, which may “harm the life and liberty of individuals.”

Hartwig, meanwhile, has teamed with national-security expert Mark Fallon, a former special agent with the U.S. Naval Criminal Investigative Service and former Homeland Security assistant director, to create a new training curriculum for investigators that’s more firmly based in science. “Progress has been slow,” Fallon says. But he hopes that future reforms may save people from the sort of unjust convictions that marred the lives of Jeffrey Deskovic and Marty Tankleff.

For Tankleff, stereotypes about liars have proved tenacious. In his years-long campaign to win exoneration and more recently to practice law, the reserved, bookish man had to learn to show more feeling “to create a new narrative” of wronged innocence, says Lonnie Soury, a crisis manager who coached him in the effort. It worked, and Tankleff finally won admittance to the New York bar in 2020. Why was showing emotion so critical? “People,” Soury says, “are very biased.”